Relying on AI without losing yourself

Andrej Karpathy tossed out the phrase “intelligence brownout” at an event recently to describe what happens when AI systems go down. It’s one of those terms that sounds slightly ridiculous and yet weirdly spot‑on. Because I’ve felt it. Not a total blackout - I don’t suddenly forget how to code or write - but definitely a flicker. Productivity stutters, confidence wobbles, and I find myself staring at my screen like someone just unplugged a part of my brain.

The most recent reminder came on June 10, 2025, when ChatGPT went down for over 10 hours (closer to 15, depending on who you ask). Google searches for "OpenAI status" spiked in the US, with over 500,000 queries. OpenAI’s own post-mortem says a routine OS update on cloud GPU hosts knocked many nodes off the network, pushing ChatGPT’s error rate to ~35% (API ~25%) at peak.

Sure, AI makes us faster. But more quietly, it starts making calls we used to make. Brownouts reveal where those calls have drifted, and whether we can still operate when the lights dim.

What brownouts reveal

People love to say AI is just another tool. But that’s a lazy comparison. Calculators replaced arithmetic, compilers replaced translation, search replaced retrieval. Each of those automated a task. AI goes further: it seeps into how you approach problems, how you weigh options, how you decide what matters. When it goes down (which will happen from time to time), yes, you lose speed. But more importantly, you notice how much of your own judgment you’ve handed over without realising.

In the 2025 Stack Overflow survey, 84% of developers said they are using or planning to use AI tools, and 51% of professional developers said they use them daily.

The lazy dev’s dream (and nightmare)

Before AI coding assistants, debugging was this messy ritual: Google the error message, skim Stack Overflow threads, open twelve tabs of docs, copy‑paste until something half‑worked. Ugly, yes. But it forced you to actually understand what was happening. You had to learn, whether you liked it or not.

Enter AI: now I can just explain the issue in plain English. The model suggests fixes, writes the code, handles syntax, edge cases, boilerplate. I don’t need ten tabs open. I don’t even need to remember the exact method signature. It feels like having a senior dev permanently on call - patient, tireless, and very confident.

But a lot of the time, that “senior dev” is drunk. When I was learning frontend, I leaned hard on AI. I’d ask for a feature and get back 300‑line code dumps. I didn’t know enough to question it, so I shipped it. Weeks later, once I had a clearer handle on component architecture, state management, and performance budgets, I realised my codebase was packed with extra dependencies and messy patterns. Cleaning it up took forever. AI didn’t save me time; it just pushed the pain down the road. That’s a nightmare scenario. You get speed today at the cost of long-term debt you don’t even see piling up.

On the flip side, it’s also been a "teacher". I had no idea about small accessibility details - managing focus traps, announcing dynamic state changes - until the model pointed them out. That nudge opened up a corner of frontend craft I’d been skipping. It felt like a mentor dropping the exact UI detail I didn’t know I was missing.

So which is it - crutch or mentor? Honestly, in the most cliché way possible, it’s both. AI can make you smarter or lazier. It depends on whether you treat it as autopilot or as a co‑pilot. If you just accept whatever it spits out, you’ll atrophy. If you let it point the way and then dig deeper yourself, you’ll level up faster than you thought possible.

The cycle of dependency

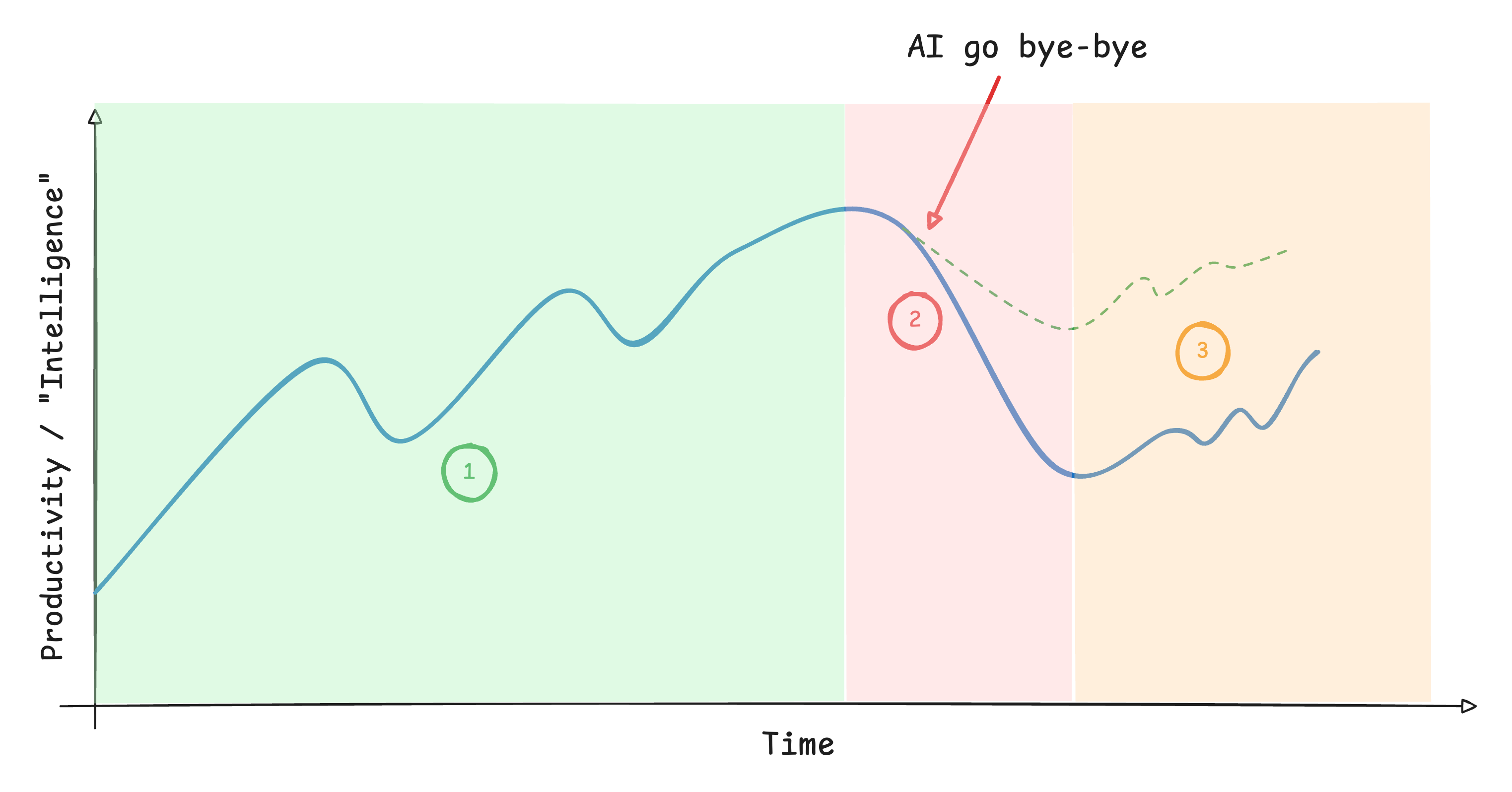

I see it less as a straight line and more like a wave. Productivity shoots up when you first plug AI into your workflow, then dips when you hit the messy side effects, then stabilizes once you learn how to manage it. And the cycle keeps repeating.

When I say “productivity” here, treat it as your yardstick: whatever you use to tell if the work is going well - shipping pace, how clean reviews feel, how little rework you do, or something else entirely.

Stage 1. At the start, AI just makes everything feel faster. You’re moving quickly, skipping over boilerplate, and shipping in days what would’ve taken weeks. But then reality shows up. The code is messy, the dependencies are bloated, and you don’t really understand why it works. Productivity slumps, not because you’ve lost the tool, but because you have to stop and untangle what it produced. That’s when you start learning the quirks of the model, the patterns you should accept, and the ones you should immediately rewrite. After that learning curve, your baseline actually shifts upward.

Stage 2. Right. Things look good again. Until your AI decides to take a day off. When the system you’ve been leaning on suddenly disappears, the drop isn’t just speed, it’s confidence. You realise how much of the “thinking” you’d quietly outsourced. The slump is steep because you don’t just have to keep coding; you have to rebuild your own decision‑making muscles. For some people, this drop lands them almost all the way back at Stage 1. For others, it’s smaller but still painful. The severity of that cliff says a lot about how resilient (or fragile) your relationship with AI has become.

Stage 3. Eventually you recover. You adapt to the AI outage, rebuild some of your own intuition, and move forward again. It looks like the “old” cycle of programming: progress, stall, debug, repeat. But it’s not a full reset, because you now carry whatever new skills you picked up during the outage. That means your long‑term baseline keeps trending upward, even if the slope is jagged.

There’s another way to frame this graph. Imagine a dotted line running just below the blue curve after the outage: an ideal slump. In that version, productivity still drops, but not catastrophically. The outage slows you down, but it doesn’t knock you back to square one. That dotted line is the resilience path - the one where you’ve practiced enough manual reps and built enough of your own judgment that AI is a booster, not a crutch.

Fragility is not just slower typing

If Cursor vanished tomorrow, I’d still write code - just slower, crankier, and with more caffeine. But the real risk isn’t slower typing; it’s when you stop trusting your own judgment without the tool. If every architecture decision, bug fix, and naming choice needs AI to validate it, the outage isn’t just inconvenient. It’s destabilising.

Developers don’t lose productivity first; they lose confidence. Confidence is what lets you tackle unfamiliar problems, make trade‑offs, and actually own your work. Brownouts sting not because we type slower, but because we’ve quietly been renting judgment instead of building it.

We used to joke about hallucinations. GPT-3.5 would invent APIs, GPT-4o would get overconfident, and you could usually spot the cracks. But as each generation improves - GPT-5, Claude Sonnet/Opus 4, whatever comes next - the failures aren’t always obvious anymore. The code compiles, the explanation sounds airtight, the trade-off analysis feels reasonable. That’s exactly what makes the dependency more fragile. You don’t notice the flaws piling up because they’re subtle, hidden behind the polish.

And here’s the deeper question: what does that do to our identity as developers? A craft is partly defined by the parts you can’t outsource. If debugging intuition, naming, problem decomposition - the actual thinking parts - are consistently handed to AI, then what exactly is “yours” in the work? When the lights flicker, you notice which parts of your craft you still own, and which parts you’ve quietly outsourced.

The danger isn’t that AI literally steals our intelligence. The danger is that we confuse its convenience with our capability. We stop flexing our own judgment because the machine is so damn quick at flexing for us. And then, when the machine stutters, we realise we’ve let parts of our identity atrophy.

The cultural divide

Not everyone even notices these brownouts. I know plenty of people who barely touch AI tools. For them, outages are irrelevant. At most, they might complain when a service they use hiccups because some hidden AI dependency failed.

Meanwhile, AI-native users - devs glued to Copilot, students writing essays with ChatGPT, marketers drafting campaigns - feel like their entire workflow just flatlined. That gap is striking. For one group, brownouts are a mild inconvenience. For the other, they’re existential.

Picture an old‑school sysadmin who still writes Bash from muscle memory: AI downtime is a non‑event. Contrast that with a junior dev who’s never written a non‑AI‑assisted function: the brownout is a panic attack. The same outage exposes a gap in how people experience their own skills. Among seasoned devs, ‘highly trust AI’ is ~3%, while ‘highly distrust AI’ hits ~20%. And that gap will shrink as AI seeps into everything - even people who “don’t use AI” will feel it indirectly.

Resilience playbook

Having used AI-assisted coding tools for a few years, I’d recommend these rules. But honestly, I often ignore them myself and just “go with the vibes”:

- Decide first, ask AI second. Draft your own plan, API shape, or naming before you prompt.

- Manual reps on basics weekly (no AI). Write a small function, debug with docs only.

- Write a one‑paragraph rationale before generating code: why this approach, what trade‑offs.

- Treat AI output like a PR: diff it, test it, replace boilerplate with your patterns.

- Keep a debt log for AI‑introduced dependencies and patterns to revisit.

In other words: habits that keep you near the dotted line, not the cliff.
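The debt log is the one habit here that benefits from a little structure, and it doesn’t need to be fancy. Here’s a minimal sketch in Python of what mine could look like. Everything in it is an assumption for illustration: the `DebtEntry` and `DebtLog` names, the fields, and the example entries are all made up, and a plain text file or a spreadsheet would do the same job.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class DebtEntry:
    """One AI-introduced dependency or pattern to revisit later (hypothetical shape)."""
    item: str      # e.g. a package name or a short pattern description
    kind: str      # "dependency" or "pattern"
    reason: str    # why it was accepted at the time
    added: date    # when it entered the codebase
    resolved: bool = False


class DebtLog:
    """In-memory log of things the model introduced that nobody has reviewed yet."""

    def __init__(self) -> None:
        self.entries: List[DebtEntry] = []

    def add(self, item: str, kind: str, reason: str,
            added: Optional[date] = None) -> DebtEntry:
        # Default to today's date when none is given.
        entry = DebtEntry(item, kind, reason, added or date.today())
        self.entries.append(entry)
        return entry

    def resolve(self, item: str) -> bool:
        # Mark the first matching open entry as resolved; report whether one was found.
        for entry in self.entries:
            if entry.item == item and not entry.resolved:
                entry.resolved = True
                return True
        return False

    def open_entries(self, older_than_days: int = 0,
                     today: Optional[date] = None) -> List[DebtEntry]:
        # Unresolved entries that are at least `older_than_days` old.
        today = today or date.today()
        return [
            e for e in self.entries
            if not e.resolved and (today - e.added).days >= older_than_days
        ]


if __name__ == "__main__":
    log = DebtLog()
    # Example entries are invented for illustration.
    log.add("some-date-library", "dependency", "model suggested it; not evaluated")
    log.add("global state store", "pattern", "shipped as generated")
    for entry in log.open_entries():
        print(f"[{entry.kind}] {entry.item}: {entry.reason}")
```

The `older_than_days` filter is the part I’d actually use: anything that has sat unreviewed for a month is a good candidate for one of those manual, no-AI sessions.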

Living with the flicker

I don’t think AI dependency is bad. It’s inevitable. Humans have always extended themselves with tools. What’s different now is that we’re extending not just our muscles or memory, but our reasoning. That’s why outages feel personal, like someone dimmed the lights in your head.

Dependency only turns toxic when we stop thinking for ourselves. The point isn’t to shun AI or pretend to be some manual‑only purist. The point is to draw the line: let AI accelerate the grunt work, but don’t outsource the judgment that defines your craft. Brownouts are reminders to check where that line is, and whether it’s slipped.

If you can’t code without AI, are you really coding - or just typing prompts? I don’t have a grand answer. What I do have are a handful of habits and a willingness to be slower on purpose now and then. That’s enough to make sure my name stays on the work. The outage just flickers the lights long enough to remind me: redraw the line, before the tool redraws it for me.